Interpolating Normals

When a model is drawn, its normals first arrive in the vertex shader. In the early days of OpenGL, the ambient, diffuse, and specular terms were calculated per vertex rather than per fragment, and the resulting colors were interpolated across the triangle. Per-vertex shading, also known as Gouraud shading, is fast but ugly:

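Specular highlights in particular do not render cleanly with per-vertex shading. Lighting is evaluated only at the corners, so a highlight that falls inside a triangle is either missed entirely or smeared across the whole face.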

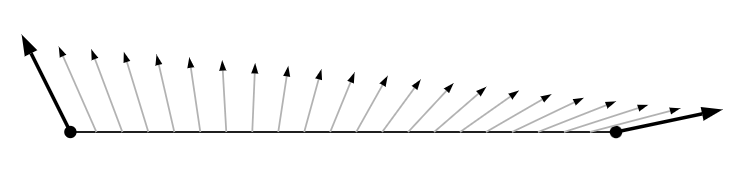
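To make the missed-highlight problem concrete, here is a minimal sketch of a single triangle edge seen in profile. The scene is hypothetical: a flat edge from x = -1 to x = 1 with normal (0, 1) at both ends, and a light and eye both at (0, 2), so the true highlight peaks at the midpoint. For brevity it assumes a Blinn-Phong specular term with shininess 32. A Phong term built on reflection vectors shows the same effect. `gouraud` lights only the two vertices and blends the results. `per_fragment` lights every sample along the edge.

```python
import math

def normalize(v):
    length = math.hypot(*v)
    return (v[0] / length, v[1] / length)

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1]

def lerp(a, b, t):
    return a + (b - a) * t

# Hypothetical scene (assumption): edge from x=-1 to x=1 along y=0,
# normal (0, 1) at both vertices, light and eye both at (0, 2).
LIGHT = (0.0, 2.0)
EYE = (0.0, 2.0)
NORMAL = (0.0, 1.0)
SHININESS = 32.0

def specular(x):
    """Blinn-Phong specular term at the point (x, 0)."""
    to_light = normalize((LIGHT[0] - x, LIGHT[1]))
    to_eye = normalize((EYE[0] - x, EYE[1]))
    half = normalize((to_light[0] + to_eye[0], to_light[1] + to_eye[1]))
    return max(dot(NORMAL, half), 0.0) ** SHININESS

def gouraud(t):
    # Per-vertex: light the two endpoints, then blend the intensities.
    return lerp(specular(-1.0), specular(1.0), t)

def per_fragment(t):
    # Per-fragment: light the actual point at parameter t along the edge.
    return specular(lerp(-1.0, 1.0, t))

for t in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(f"t={t:.2f}  gouraud={gouraud(t):.3f}  per_fragment={per_fragment(t):.3f}")
```

Because both vertices sit away from the highlight, each one receives only (2/√5)^32 = 0.8^16 ≈ 0.028 of specular light. The Gouraud column therefore stays near 0.03 along the whole edge, while the per-fragment column climbs to 1.0 at the midpoint.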

Instead of interpolating the shaded color across a triangle, we want to interpolate the normal. However, there's a problem with interpolating vertex normals. Consider this side profile of normals being interpolated between two vertices:

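The issue is that interpolated normals lose their unit length. Blending two unit vectors that point in different directions gives a vector whose tip lies on the straight chord between their tips. That chord sits inside the unit circle, so the blended vector is shorter than 1. We can see in the figure that the blended normals are shorter than the vertex normals. They should look like this: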

In the fragment shader, we must therefore renormalize the interpolated normal, which we do with the built-in `normalize` function.
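The shrinkage and its fix can be checked numerically. For unit normals n0 and n1 separated by an angle θ, the squared length of (1 − t)n0 + t·n1 is 1 − 2t(1 − t)(1 − cos θ). This reaches its minimum at the midpoint, where the length is cos(θ/2). The sketch below is not shader code; Python stands in for the per-fragment work. The side profile is hypothetical: two vertex normals 90° apart and a light shining straight down. A plain linear blend stands in for the rasterizer's interpolation, and the local `normalize` plays the role of GLSL's built-in.

```python
import math

def length(v):
    return math.sqrt(sum(c * c for c in v))

def normalize(v):
    # Plays the role of GLSL's built-in normalize(): rescale v to unit length.
    n = length(v)
    return tuple(c / n for c in v)

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def lerp_vec(a, b, t):
    # Linear blend standing in for the rasterizer's interpolation.
    return tuple((1.0 - t) * ai + t * bi for ai, bi in zip(a, b))

# Hypothetical side profile (assumption): vertex normals tilted 45 degrees
# either way (90 degrees apart), light shining straight down from above.
N0 = normalize((-1.0, 1.0))
N1 = normalize((1.0, 1.0))
TO_LIGHT = (0.0, 1.0)

def shade_fragment(t, renormalize):
    n = lerp_vec(N0, N1, t)      # the interpolated normal a fragment receives
    if renormalize:
        n = normalize(n)         # restore unit length before lighting
    return max(dot(n, TO_LIGHT), 0.0)  # Lambertian diffuse term

for t in (0.0, 0.25, 0.5, 0.75, 1.0):
    n = lerp_vec(N0, N1, t)
    print(f"t={t:.2f}  |n|={length(n):.3f}  "
          f"raw={shade_fragment(t, False):.3f}  "
          f"renormalized={shade_fragment(t, True):.3f}")
```

With these vertex normals the un-normalized vector always has a vertical component of about 0.707. The raw diffuse value therefore stays flat across the edge, as though the surface had no curvature. Renormalizing lets the diffuse term rise to 1.0 at the midpoint, where the blended normal points straight at the light.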